
Audio streaming codecs are generally lossy: the encoding process discards information, so the decoder cannot recreate the original file exactly, even if no error occurs in the transmission channel. Despite being lossy, these codecs aim to be perceptually transparent, so that users feel they are hearing the original uncompressed audio. This is achieved by adapting the compression method to the capabilities of the Human Auditory System (HAS) while reducing the number of bits needed to represent the audio recording.

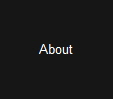

Perceptual encoding architecture. Time/frequency analysis uses the MDCT. Psychoacoustic analysis is not standardized, so each company keeps improving its own tools or creating new ones.

Psychoacoustic Analysis

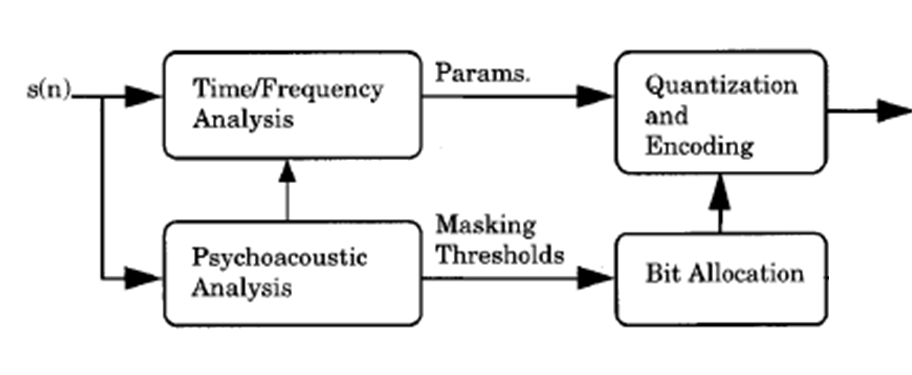

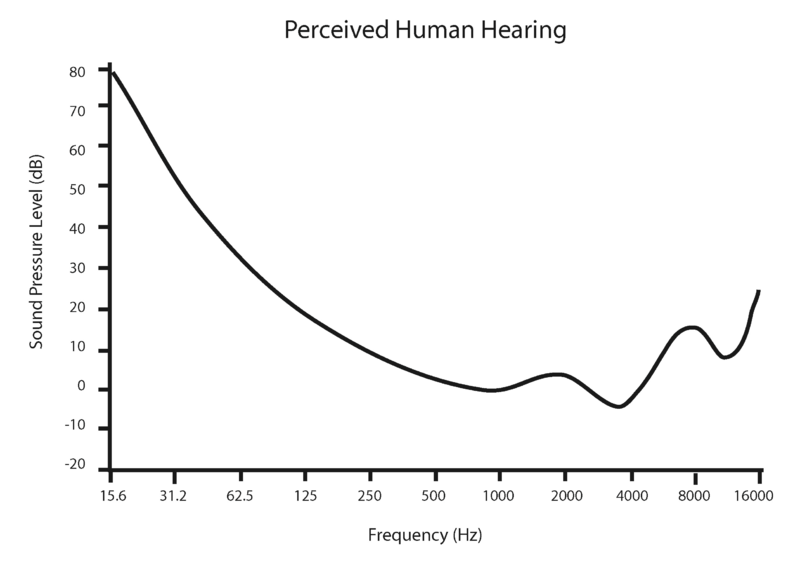

The loss comes from exploiting irrelevance, which is done by introducing a psychoacoustic model and quantization. Irrelevance refers to the frequencies and amplitudes that cannot be perceived and can therefore be ignored. A psychoacoustic model aims to exploit the perceptual characteristics of the receiver, i.e. the HAS, and that is what gives the perceptual encoder its name. It does so first through audio masking and second through perceptual coding. Audio masking means that, in the presence of a prominent sound, the perception of other sounds is affected; in some cases the background sounds seem not to be there at all until the prominent sound is gone. By not transmitting the sound components that are not perceived, the codec saves bits but becomes lossy, because those unperceived sounds are absent from the decoded signal. The minimum amplitude that the HAS can perceive at each frequency of the audible band is called the Threshold of Hearing. There is also the Threshold of Pain, at about 120 to 140 dB; as the name suggests, sounds above this intensity cause pain. In audio masking, the threshold rises around the masker according to the masker's intensity, so every sound that stays below the new threshold is masked and can be discarded by the codec.

The Threshold of Hearing (human hearing is pretty much limited to the range between 20 Hz and 20 kHz) and an example of an Audio Masking Graph. Source: Wikipedia
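The masking decision described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the threshold in quiet uses Terhardt's closed-form approximation, the Bark conversion uses Zwicker's approximation, and the masker model (`masked_threshold_db`, with its `offset_db` and `slope_db_per_bark` parameters) is a hypothetical triangular spread chosen for readability, not the model of any particular codec.

```python
import math

def threshold_in_quiet_db(freq_hz):
    """Terhardt's approximation of the threshold of hearing, in dB SPL."""
    f = freq_hz / 1000.0
    return (3.64 * f ** -0.8
            - 6.5 * math.exp(-0.6 * (f - 3.3) ** 2)
            + 1e-3 * f ** 4)

def hz_to_bark(freq_hz):
    """Zwicker's approximation of the Bark (critical-band) scale."""
    return (13.0 * math.atan(0.00076 * freq_hz)
            + 3.5 * math.atan((freq_hz / 7500.0) ** 2))

def masked_threshold_db(freq_hz, masker_hz, masker_db,
                        offset_db=10.0, slope_db_per_bark=15.0):
    """Toy masking model (assumption): the threshold raised by the masker
    falls off linearly with the distance to the masker in Bark."""
    distance = abs(hz_to_bark(freq_hz) - hz_to_bark(masker_hz))
    raised = masker_db - offset_db - slope_db_per_bark * distance
    # The effective threshold never drops below the threshold in quiet.
    return max(threshold_in_quiet_db(freq_hz), raised)

def is_audible(freq_hz, level_db, masker_hz, masker_db):
    """True if a tone at `level_db` stays above the masked threshold."""
    return level_db > masked_threshold_db(freq_hz, masker_hz, masker_db)

if __name__ == "__main__":
    # A 40 dB tone next to a loud 1 kHz masker versus far away from it.
    print(is_audible(1100, 40, masker_hz=1000, masker_db=70))
    print(is_audible(5000, 40, masker_hz=1000, masker_db=70))
```

With these illustrative parameters, a tone close to the masker falls below the raised threshold and could be dropped, while the same tone several critical bands away remains audible.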

Once the psychoacoustic analysis has produced the masking threshold, perceptual coding applies the basic principle of a perceptual audio encoder: use the masking pattern of the stimulus to determine the smallest number of bits needed for the samples of each frequency sub-band, so that the quantization noise stays inaudible while the final coding rate is reduced.
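As a rough sketch of this bit allocation idea, the snippet below derives a bit count per sub-band from the signal-to-mask ratio (SMR). It assumes the common rule of thumb that each extra bit of a uniform quantizer lowers the quantization noise by about 6.02 dB; the function names and the band levels in the example are hypothetical, and real encoders use iterative, rate-constrained allocation loops instead.

```python
import math

DB_PER_BIT = 6.02  # approximate noise reduction per extra quantizer bit

def bits_for_band(signal_db, mask_db):
    """Smallest bit count that pushes quantization noise below the mask."""
    smr = signal_db - mask_db  # signal-to-mask ratio in dB
    if smr <= 0:
        return 0  # the whole band is masked: nothing needs to be sent
    return math.ceil(smr / DB_PER_BIT)

def allocate_bits(signal_levels_db, mask_levels_db):
    """Apply bits_for_band to every sub-band."""
    return [bits_for_band(s, m)
            for s, m in zip(signal_levels_db, mask_levels_db)]

if __name__ == "__main__":
    signal = [60.0, 45.0, 30.0, 20.0]  # hypothetical band levels (dB)
    mask = [30.0, 40.0, 35.0, 5.0]     # hypothetical masking thresholds (dB)
    print(allocate_bits(signal, mask))  # [5, 1, 0, 3]
```

The third band in the example lies entirely below its masking threshold, so it receives no bits at all.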

Time/Frequency Analysis - MDCT

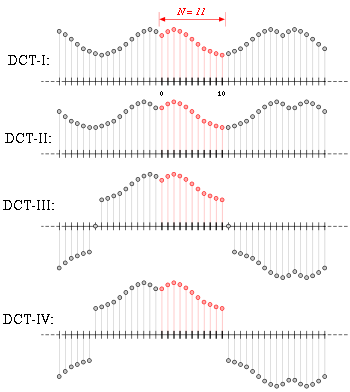

In audio signal compression, Fourier-related transforms are the tools developers usually use for time/frequency analysis. The Modified Discrete Cosine Transform (MDCT) is a variant of the Discrete Cosine Transform (DCT). Both are Fourier-related transforms, in this case related to the Discrete Fourier Transform (DFT), which represents a signal as a sum of sinusoids with different frequencies and amplitudes. The DCT variants arise from two issues that concern only discrete transforms and do not apply to continuous ones. First, because the transform is applied to a finite domain of points, it has a left and a right boundary, and the function must be defined as even or odd at each of them. Second, the point around which the function is even or odd must also be defined. With two choices of symmetry at each boundary, even or odd (2x2), and two choices for the point of symmetry at each boundary, e.g. dcbabcd or dcbaabcd (2x2), there are 16 possibilities (2x2x2x2). Half of these possibilities, those in which the left boundary is even, are DCT variants; the remaining ones are types of DST (Discrete Sine Transform). The next figure illustrates the most used types of DCT (types I-IV) on a finite data domain with N=11 data points, represented by the red dots. The types differ in the even/odd extension of the DCT input data.

DCT types I-IV

Source: Wikipedia
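The two choices for the point of symmetry, and the type-IV DCT that the MDCT builds on, can be illustrated with a short Python sketch. It evaluates the textbook DCT-IV sum directly in O(N^2) with no normalization factor, which is an assumption (libraries often scale differently); the helper names are hypothetical.

```python
import math

def extend_whole_sample(seq):
    """Even extension around the first sample itself: abcd -> dcbabcd."""
    return seq[:0:-1] + seq

def extend_half_sample(seq):
    """Even extension around a point half a sample before the first
    sample: abcd -> dcbaabcd."""
    return seq[::-1] + seq

def dct_iv(values):
    """Unnormalized DCT-IV: X_k = sum_n x_n cos[pi/N (n + 1/2)(k + 1/2)]."""
    n = len(values)
    return [
        sum(x * math.cos(math.pi / n * (i + 0.5) * (k + 0.5))
            for i, x in enumerate(values))
        for k in range(n)
    ]

def inverse_dct_iv(coeffs):
    """The DCT-IV is its own inverse up to a factor of 2/N."""
    n = len(coeffs)
    return [2.0 / n * value for value in dct_iv(coeffs)]

if __name__ == "__main__":
    print(extend_whole_sample("abcd"), extend_half_sample("abcd"))
    data = [1.0, -2.0, 0.5, 3.0, 4.0]
    print(inverse_dct_iv(dct_iv(data)))  # close to the original data
```

Because the same routine serves as forward and inverse transform, the DCT-IV is a convenient building block for lapped transforms.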

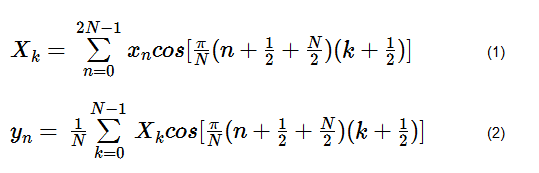

In the MDCT, which is based on the type-IV DCT, the boundary conditions are closely tied to the MDCT’s critical property of time-domain aliasing cancellation. On top of the type-IV DCT, the MDCT has the additional property of being lapped: since it is designed to operate on consecutive blocks of a larger dataset, subsequent blocks overlap so that the last half of one block coincides with the first half of the next. This feature, together with the already mentioned energy compaction qualities of the DCT, is what makes the MDCT such an attractive tool, specifically in audio compression. The mathematical definition of the MDCT follows at (1), and that of the inverse MDCT (IMDCT) at (2). One difference makes the MDCT somewhat unusual compared with other Fourier-related transforms: it is a linear function with half as many outputs as inputs (instead of the same number), F: R^(2N) -> R^N. At first glance the MDCT might look non-invertible, but perfect invertibility is achieved by adding the overlapped IMDCT outputs of subsequent overlapping blocks, which causes the errors to cancel and the original data to be retrieved; this technique is known as time-domain aliasing cancellation (TDAC).

This explains the term TDAC. Using input data that extend beyond the boundaries of the logical DCT-IV causes the data to be aliased, but conveniently the aliasing occurs in the time domain rather than the frequency domain, and it appears after the IMDCT is applied in decompression, where the overlap-add step cancels it.
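A small Python sketch can make TDAC tangible. It assumes the common convention in which the forward MDCT carries no scale factor and the IMDCT is scaled by 1/N, and it uses no window function (practical codecs apply a window satisfying the Princen-Bradley condition, with a correspondingly different scale). The function names are hypothetical and the sums are evaluated directly rather than with a fast algorithm.

```python
import math

def mdct(block):
    """Forward MDCT: 2N real samples in, N real coefficients out."""
    n = len(block) // 2
    return [
        sum(x * math.cos(math.pi / n * (i + 0.5 + n / 2) * (k + 0.5))
            for i, x in enumerate(block))
        for k in range(n)
    ]

def imdct(coeffs):
    """Inverse MDCT: N coefficients in, 2N time-aliased samples out."""
    n = len(coeffs)
    return [
        sum(c * math.cos(math.pi / n * (i + 0.5 + n / 2) * (k + 0.5))
            for k, c in enumerate(coeffs)) / n
        for i in range(2 * n)
    ]

def overlap_add_roundtrip(signal, n):
    """Transform 50%-overlapped blocks of length 2N and overlap-add them."""
    if len(signal) % n != 0:
        raise ValueError("signal length must be a multiple of n")
    # Pad with N zeros on each side so every sample lies in two blocks.
    padded = [0.0] * n + list(signal) + [0.0] * n
    output = [0.0] * len(padded)
    for start in range(0, len(padded) - 2 * n + 1, n):
        decoded = imdct(mdct(padded[start:start + 2 * n]))
        for offset, value in enumerate(decoded):
            output[start + offset] += value
    return output[n:n + len(signal)]

if __name__ == "__main__":
    signal = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
    print(imdct(mdct(signal)))               # one block alone: aliased
    print(overlap_add_roundtrip(signal, 4))  # overlap-add: original data
```

A single block run through the MDCT and IMDCT does not return the input, but once neighbouring blocks are overlap-added the aliasing terms cancel and the signal is recovered up to floating-point error.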

Quantization

Quantization is the process by which a large set of input values is mapped onto a smaller set. In audio compression, quantization is used at two levels: when converting from analogue to digital, and during source coding. It is ultimately what makes a compression algorithm lossy, where the purpose is to manage distortion within the limits of the bit rate supported by a communication channel or storage medium. A device or algorithm that performs quantization is called a quantizer. In audio compression, the MDCT components of each band are quantized using a defined quantization step and scale factor. Because of masking, there is “space” below the masking threshold where quantization noise can be hidden. The maximum quantization noise allowed in each subband is defined by the masking threshold, taken at the point where the left or right subband boundary meets the threshold level. In decompression, quantization has to be inverted, and that is when the losses become real, because the original values from before quantization can no longer be recovered. The inversion is done by multiplying the quantized values by the quantization step, which yields the MDCT components in the decoder.
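The quantize/dequantize round trip can be sketched as follows. This is a minimal uniform quantizer with a single step size per band; the function names and coefficient values are hypothetical, and real codecs combine the step with per-band scale factors and often use non-uniform quantization.

```python
def quantize(coeffs, step):
    """Map each coefficient to the index of the nearest multiple of step."""
    return [round(c / step) for c in coeffs]

def dequantize(indices, step):
    """Decoder side: multiply the indices by the quantization step."""
    return [q * step for q in indices]

if __name__ == "__main__":
    coeffs = [0.9, -2.4, 5.05, 0.1]  # hypothetical MDCT components
    step = 0.5
    indices = quantize(coeffs, step)
    restored = dequantize(indices, step)
    print(indices)   # [2, -5, 10, 0]
    print(restored)  # [1.0, -2.5, 5.0, 0.0]
    # The error per coefficient never exceeds half a step.
    print(max(abs(a - b) for a, b in zip(coeffs, restored)))
```

A larger step gives smaller indices, which cost fewer bits, at the price of more quantization noise; the encoder chooses the step so that this noise stays under the masking threshold of the band.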

Entropy Coding

Entropy coding is a traditional lossless compression applied to all the bits in the file, taken together as a whole, that exploits statistical redundancy. It is extremely fast, because it relies on a lookup table: a variable-length code table derived from the estimated probability of occurrence of each possible value of the source symbol. The length of each codeword is approximately proportional to the negative logarithm of the probability, so the most common symbols use the shortest codes. Although different approaches are possible, the algorithms usually build trees in which the deepest leaf nodes correspond to the least probable symbols, which are therefore represented by longer codewords. Entropy coding is completely lossless and fully reversible. Decompression is simply a matter of translating the stream of prefix codes back into individual symbol values, usually by traversing the tree node by node as each bit is read from the input stream. Before this can take place, however, the tree must be reconstructed, so the information needed to rebuild it must be sent in advance. This overhead represents a very small percentage of the total data, while the method as a whole is fundamental to good compression. In audio compression, entropy coding is done after quantization, as the last step of the source coding algorithm.
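The tree-based scheme described here matches Huffman coding, so a compact Huffman sketch in Python is given below as one possible instance. The function names are hypothetical, bits are kept as a string of "0" and "1" characters for readability, and actual codecs typically use predefined or codebook-based tables instead of building a tree per file.

```python
import heapq
from collections import Counter

def build_huffman_codes(symbols):
    """Return a {symbol: bit-string} prefix code built from frequencies."""
    counts = Counter(symbols)
    if not counts:
        return {}
    if len(counts) == 1:
        return {next(iter(counts)): "0"}
    # Heap entries: (weight, tie_breaker, {symbol: code built so far}).
    heap = [(w, i, {s: ""}) for i, (s, w) in enumerate(counts.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        w0, _, left = heapq.heappop(heap)   # two least probable subtrees
        w1, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w0 + w1, next_id, merged))
        next_id += 1
    return heap[0][2]

def encode(symbols, codes):
    """Concatenate the codeword of every symbol."""
    return "".join(codes[s] for s in symbols)

def decode(bits, codes):
    """Read bits one by one until they match a codeword (prefix code)."""
    reverse = {code: symbol for symbol, code in codes.items()}
    decoded, current = [], ""
    for bit in bits:
        current += bit
        if current in reverse:
            decoded.append(reverse[current])
            current = ""
    return decoded

if __name__ == "__main__":
    data = [0, 0, 0, 0, 0, 0, 1, 1, 1, -1, -1, 2]  # quantized values
    codes = build_huffman_codes(data)
    bits = encode(data, codes)
    print(codes, len(bits))
    print(decode(bits, codes) == data)
```

In the example, the most frequent value gets the shortest codeword and the twelve symbols need fewer bits than a fixed two-bit code would. The code table itself plays the role of the side information that must reach the decoder in advance.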

Codecs

A codec has a major influence on transmission and playback, as well as on the final price if software and device developers have to pay royalties each time they encode and distribute content. Each codec claims to be better than the others, and there are always technical criteria that can tip the balance one way or the other. Table 1 lists the most common codecs used for streaming and some of their features.

| Codec | Creator | Release | Patented | Bitrate (kbps) |
|---|---|---|---|---|
| MP3 | Fraunhofer Society | 1993 | Yes | 32-320 |
| AAC | MPEG Audio Committee | 1997 | Yes | 16-320 |
| Ogg Vorbis | Xiph.Org Foundation | 2000 | No | 32-320 |
| WMA | Microsoft | 1999 | Yes | 32-320 |

Comparison of Audio Codecs

a. MP3

MPEG Audio Layer III (MP3) is the oldest and best known of these codecs, which is the main reason why it is still the most widely used. It is supported by an enormous number of consumer devices, so it is easy to integrate into streaming applications. MP3 owes its fame to the listening quality it delivered once technology was able to exploit its potential and to the large number of compatible devices, but not only to these. In audio, the quality difference between formats is so small that it has not overcome the inertia against switching to newer ones and, unlike what happens in video, this keeps MP3's dominance alive.

Unlike its major market rivals, this codec is not a pure MDCT codec: in MP3, the frequency analysis is not applied to the audio signal directly, but to the output of a 32-band polyphase quadrature filter (PQF) bank. The MDCT is then applied to each of the resulting subbands, over overlapping windows of 36 samples per band (in long blocks), which yields 18 coefficients per band ordered from the lowest to the highest frequency. These coefficients are then quantized based on a psychoacoustic model, and compression ends with entropy encoding. Many improvements have been added since the first implementation, and several different approaches to designing an MP3 encoder are possible. All the rules and specifications are on the decoder side, so as long as the output can be decoded there is no obligation to follow the Fraunhofer Society's steps religiously. One such case is the LAME project, a high-quality MP3 encoder whose development in its modern form started around 2003 and which has its own psychoacoustic model. Today, LAME is considered the best MP3 encoder at mid-to-high bitrates and at variable bitrate (VBR), mostly thanks to the dedicated work of its developers and the open source licensing model, which allowed the project to tap into engineering resources from all around the world. Quality and speed improvements are still being made, probably making LAME the only MP3 encoder still under active development. At the time of writing, the latest release is LAME v3.99, which came out in October 2011.

Almost as important as the audio data, MP3 also supports metadata (i.e. data about data) through ID3, a metadata container that allows information such as the title, artist, album and track number to be stored in the file itself. Nowadays, when a user can have thousands of songs or a huge playlist, being able to find the desired one at any moment is a fundamental feature. Including this information in the audio file without substantially affecting its size is the main function of a metadata container. An important characteristic of MP3, and one of the reasons why the industry does not look on it favourably (despite the sophistication of its source coding), is that it offers no way to add digital rights management (DRM).
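To show how little space such a container needs, here is a hedged Python sketch that reads the oldest and simplest variant, ID3v1, which is a fixed 128-byte block at the end of the file starting with the marker `TAG`. The function name `parse_id3v1` is hypothetical, the text is assumed to be Latin-1 encoded, and the newer ID3v2 tags (stored at the start of the file, with a richer frame structure) are not handled.

```python
def parse_id3v1(data):
    """Return the ID3v1 fields found in the raw bytes of a file,
    or None if the file carries no ID3v1 tag."""
    if len(data) < 128:
        return None
    tag = data[-128:]
    if tag[:3] != b"TAG":
        return None

    def text(raw):
        # Fields are fixed-width and padded with zero bytes or spaces.
        return raw.split(b"\x00", 1)[0].decode("latin-1").strip()

    fields = {
        "title": text(tag[3:33]),
        "artist": text(tag[33:63]),
        "album": text(tag[63:93]),
        "year": text(tag[93:97]),
    }
    # ID3v1.1 stores the track number in the last byte of the comment
    # field, preceded by a zero byte.
    if tag[125] == 0 and tag[126] != 0:
        fields["track"] = tag[126]
    return fields

if __name__ == "__main__":
    # Build a synthetic tag instead of reading a real file.
    tag = (b"TAG"
           + b"Song".ljust(30, b"\x00")
           + b"Artist".ljust(30, b"\x00")
           + b"Album".ljust(30, b"\x00")
           + b"2011"
           + b"\x00" * 28 + b"\x00" + bytes([7])  # comment, zero, track
           + bytes([255]))                         # genre byte
    print(parse_id3v1(b"...audio frames..." + tag))
```

The whole tag adds only 128 bytes to the file, which is negligible next to the audio data.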

b. AAC

AAC, which stands for Advanced Audio Coding, was developed to be the successor of MP3, providing higher perceived quality at lower bitrates. It was first named MPEG-2 Audio Non-Backward Compatible (because of its incompatibility with MPEG-1 decoders), but the name was changed for marketing reasons, given the negative feelings the “non-backward” label induced in clients. It is now also an MPEG-4 Audio standard. Having achieved its performance goal, it is now the default audio format on all Apple devices, which gives it a very important position in the codec market. Both MP3 and AAC can be perceptually transparent; the main differences between them are the following:

- More sample frequencies (from 8 to 96 kHz) than MP3 (16 to 48 kHz);

- Up to 48 channels (MP3 supports up to two channels in MPEG-1 mode and up to 5.1 channels in MPEG-2 mode);

- Arbitrary bit-rates and variable frame length. Standardized constant bit rate with bit reservoir;

- Higher efficiency and simpler filterbank (rather than MP3's hybrid coding, AAC uses a pure MDCT);

- Higher coding efficiency for transient and stationary signals.

In AAC, the wideband audio coding algorithm is built on a strategy that exploits the Human Auditory System while reducing the amount of data needed to represent high-quality digital audio. As previously explained, signal components that are perceptually irrelevant are discarded and redundancies in the coded audio signal are eliminated. To do so, AAC converts the signal from the time domain to the frequency domain by applying the MDCT directly. The frequency-domain signal is then quantized based on a psychoacoustic model and encoded. To finish the process, internal error-correction codes are added to prevent corruption during transmission.

AAC does not state how the encoder should be built; instead, it provides a complex toolbox for a wide range of operations, from low-bitrate speech coding to high-quality audio coding and music synthesis. This toolbox gives codec designers more flexibility and corrects many design problems present in MP3. On the decoding side there are strict rules and specifications, since any AAC decoder has to be able to decode the output of any AAC encoder. As for metadata, AAC also allows an ID3 tag to be embedded, as MP3 does, but unlike MP3 it supports DRM. When AAC was first adopted by Apple, all files were protected and could only be played on the device to which they had been downloaded.

c. Ogg Vorbis

Of the codecs listed here, Ogg Vorbis is the only fully open, non-proprietary, patent-and-royalty-free, lossy compressed audio format. Like AAC, it surpasses MP3 in perceived quality, and its main advantage over the MPEG audio formats is that it can be used without licensing fees. It is therefore often used in open source projects and commercial applications, and it is Spotify’s choice. Fully open means that anyone can change the original code and use it as they wish. The only restriction imposed by Xiph is that the encoded file must be decodable by any Ogg Vorbis decoder. Periodically, modifications that are seen as improvements are merged into the reference codebase. One example is aoTuV Vorbis, a fork of Ogg Vorbis that offers even better audio quality, mainly at low bitrates.

Vorbis is intended for sample rates from 8 kHz telephony to 192 kHz digital masters and a range of channel representations (monaural, polyphonic, stereo, quadraphonic, 5.1, ambisonic, or up to 255 discrete channels). Given 44.1 kHz (standard CD audio sampling frequency) stereo input, the encoder will produce output from roughly 45 to 500 kbit/s (32 to 500 kbit/s for aoTuV tunings) depending on the specified quality setting. Vorbis is inherently variable-bitrate (VBR), so bitrate may vary considerably from sample to sample. (It is a free-form variable-bitrate codec and packets have no minimum size, maximum size, or fixed/expected size.) Vorbis aims to be more efficient than MP3, with data compression transparency being available at lower bitrates.

Vorbis uses the MDCT for converting sound data from the time domain to the frequency domain. The resulting frequency-domain data is broken into noise floor and residue components, and then quantized and entropy coded using a codebook-based vector quantization algorithm. The decompression algorithm reverses these stages.

This codec also has its own metadata container, since including an ID3 tag would break the Vorbis container. Vorbis comments, as the container is named, allow information to be added, but restricted to short text comments rather than arbitrary metadata.
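Vorbis comments are plain `NAME=value` text entries, which the following Python sketch turns into a dictionary. It assumes the comment strings have already been extracted and decoded from the Vorbis comment header (the length-prefixed binary layout is not parsed here), and the function name is hypothetical. Field names are treated as case-insensitive and may repeat, so each name maps to a list of values.

```python
def parse_vorbis_comments(comments):
    """Group 'NAME=value' strings by upper-cased field name."""
    tags = {}
    for entry in comments:
        name, separator, value = entry.partition("=")
        if not separator:
            continue  # not a NAME=value pair, skip it
        tags.setdefault(name.upper(), []).append(value)
    return tags

if __name__ == "__main__":
    raw = ["TITLE=Song", "artist=First Artist", "ARTIST=Second Artist"]
    print(parse_vorbis_comments(raw))
```

Keeping a list per field allows, for example, several artists to be attached to a single track.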

Despite being non-proprietary, Vorbis allows DRM to be embedded. For example, Spotify’s cache files are all protected and can only be played offline when bought.

d. WMA

WMA was implemented by Microsoft as a competitor to MP3, because it could be integrated into the Windows operating systems without paying royalties. Fundamentally, WMA is a transform coder based on the MDCT, somewhat similar to AAC and Vorbis. Like AAC and Ogg Vorbis, WMA was intended to address perceived deficiencies in the MP3 standard. Given their common design goals, it is not surprising that the three formats ended up making similar design choices: all three are pure transform codecs. Furthermore, the MDCT implementation used in WMA is essentially a superset of those used in Ogg Vorbis and AAC, such that the WMA IMDCT and windowing routines can be used to decode AAC and Ogg Vorbis almost unmodified. However, quantization and stereo coding are handled differently in each codec. WMA’s first version was designed for sampling frequencies up to 48 kHz and two channels (stereo), but WMA Professional, which came out in 2003 and is the version that can compete with AAC and Vorbis, supports sampling rates of up to 96 kHz and up to eight discrete channels. Note that WMA Pro is not compatible with decoders for the first WMA versions.

Metadata is also handled differently than in AAC and MP3. Although the result is similar to ID3, Microsoft developed a proprietary container, named Advanced Systems Format (ASF), that specifies how metadata is to be handled. Metadata may include song name, track number, artist name, and also audio normalization values. This container can optionally support DRM.
